Attention Is All You Need Explained

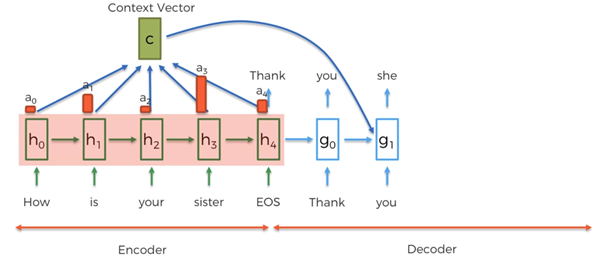

Previously, RNNs (recurrent neural networks) were regarded as the go-to architecture for translation.

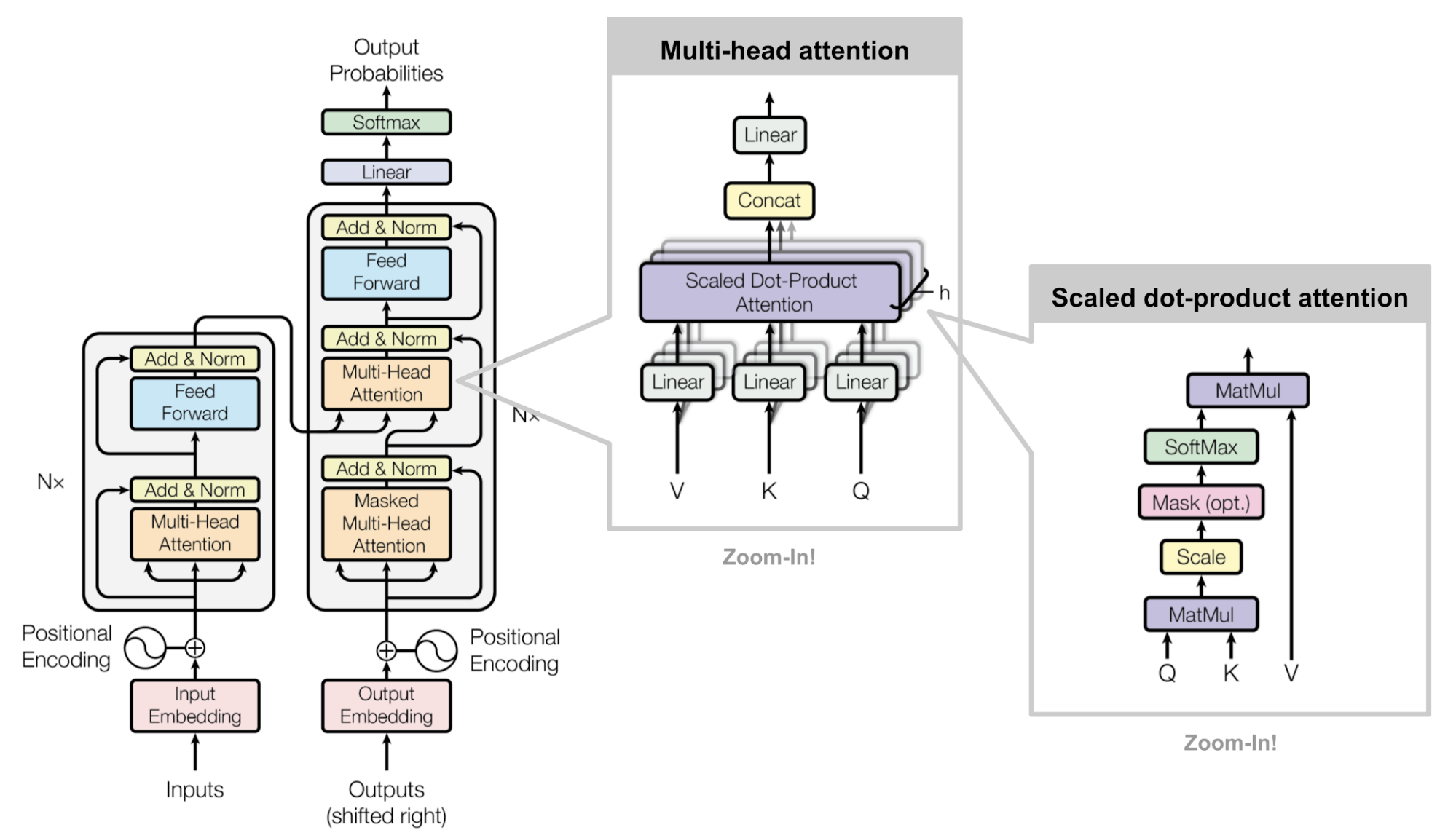

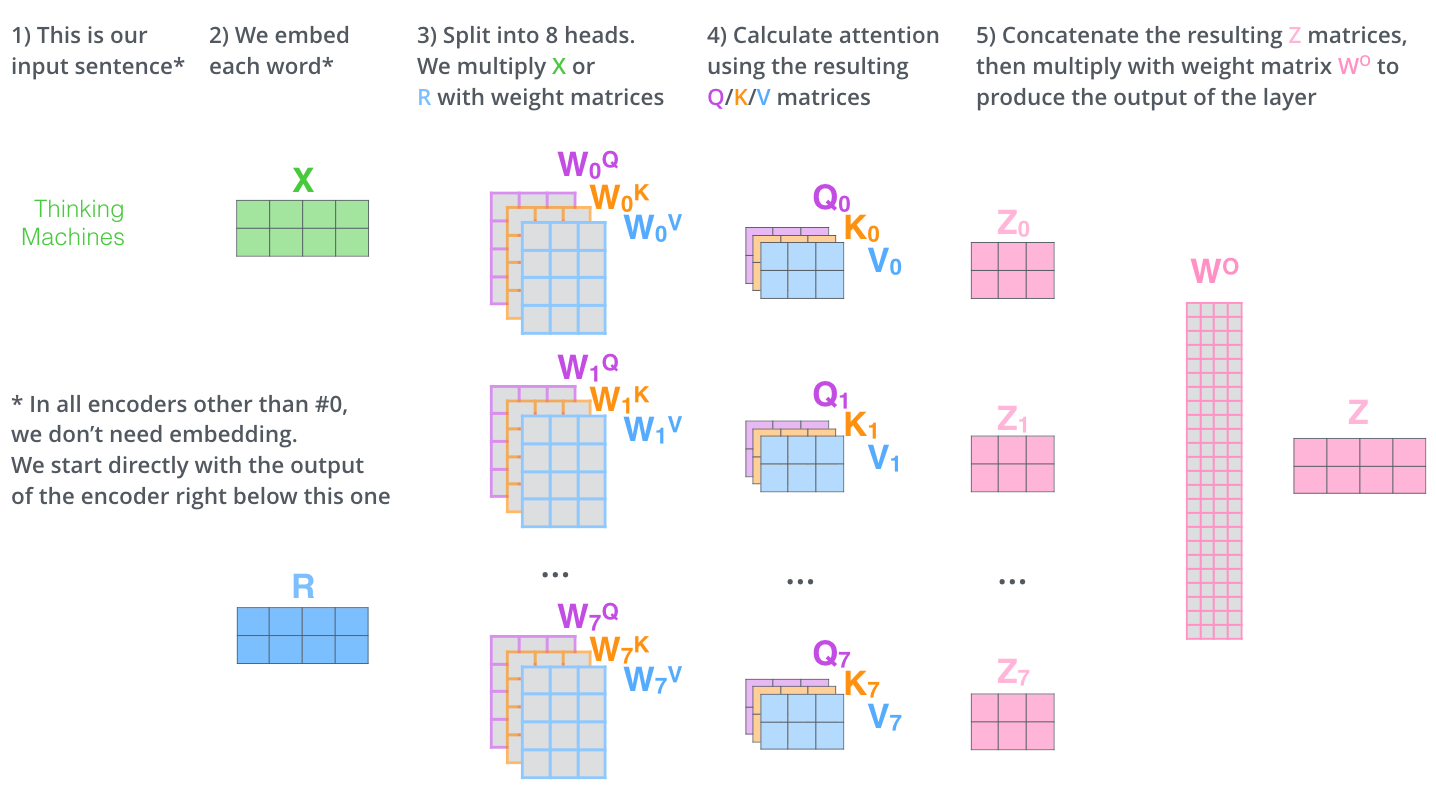

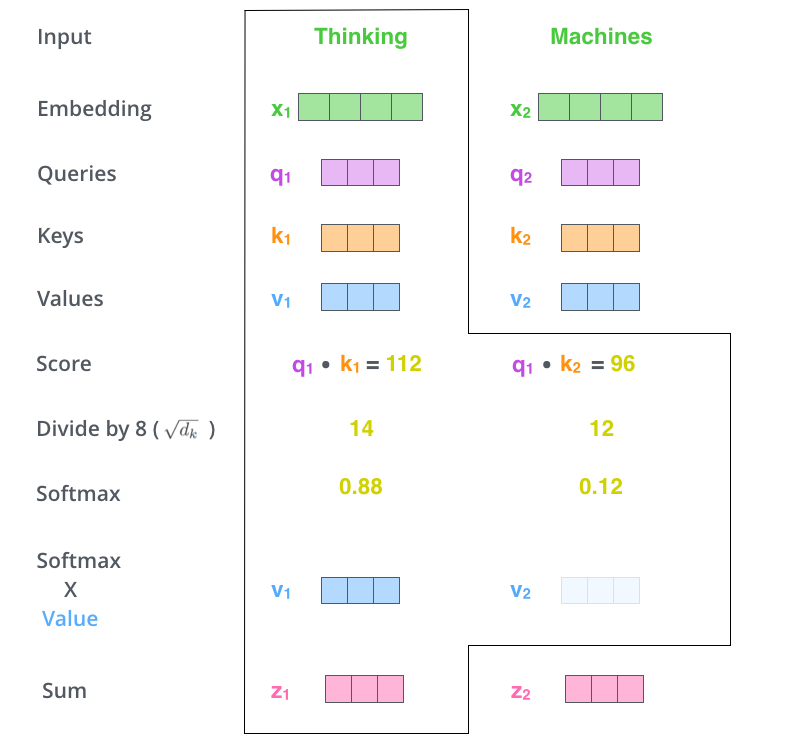

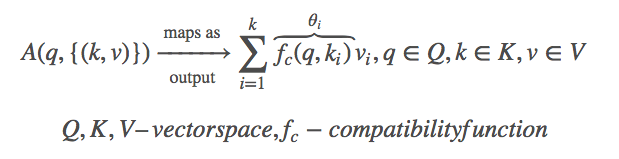

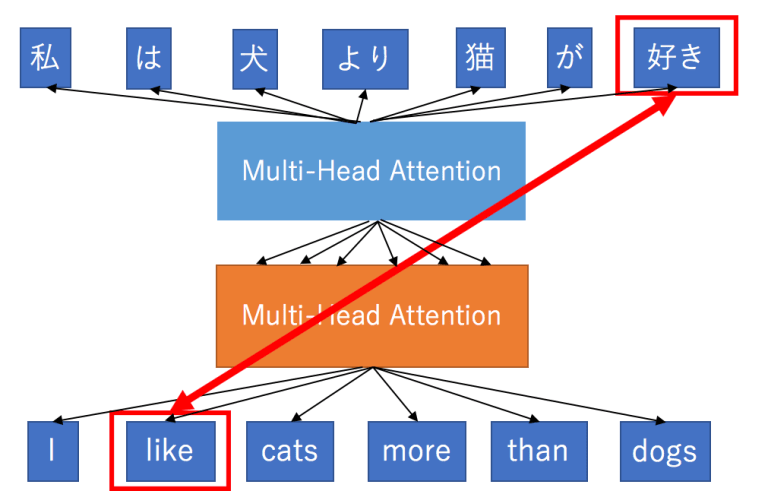

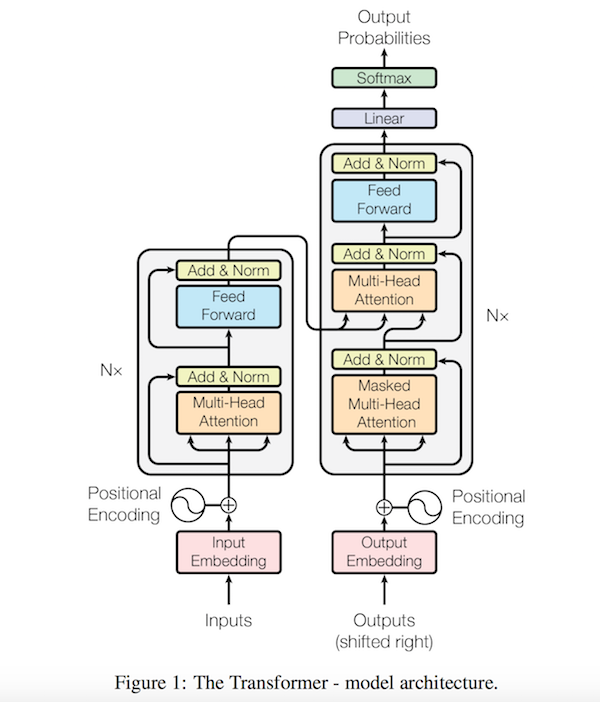

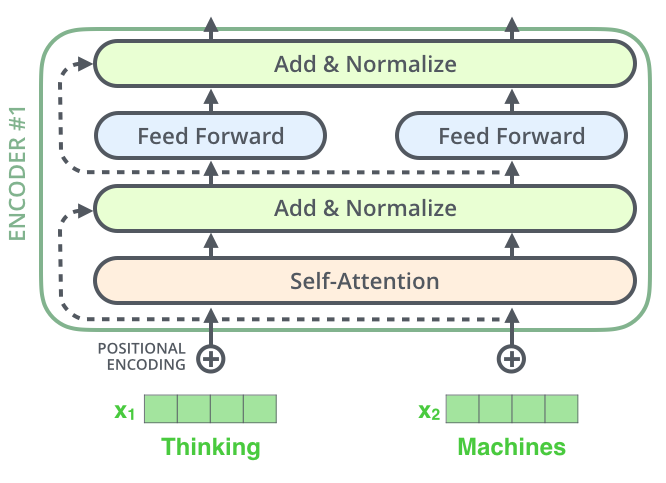

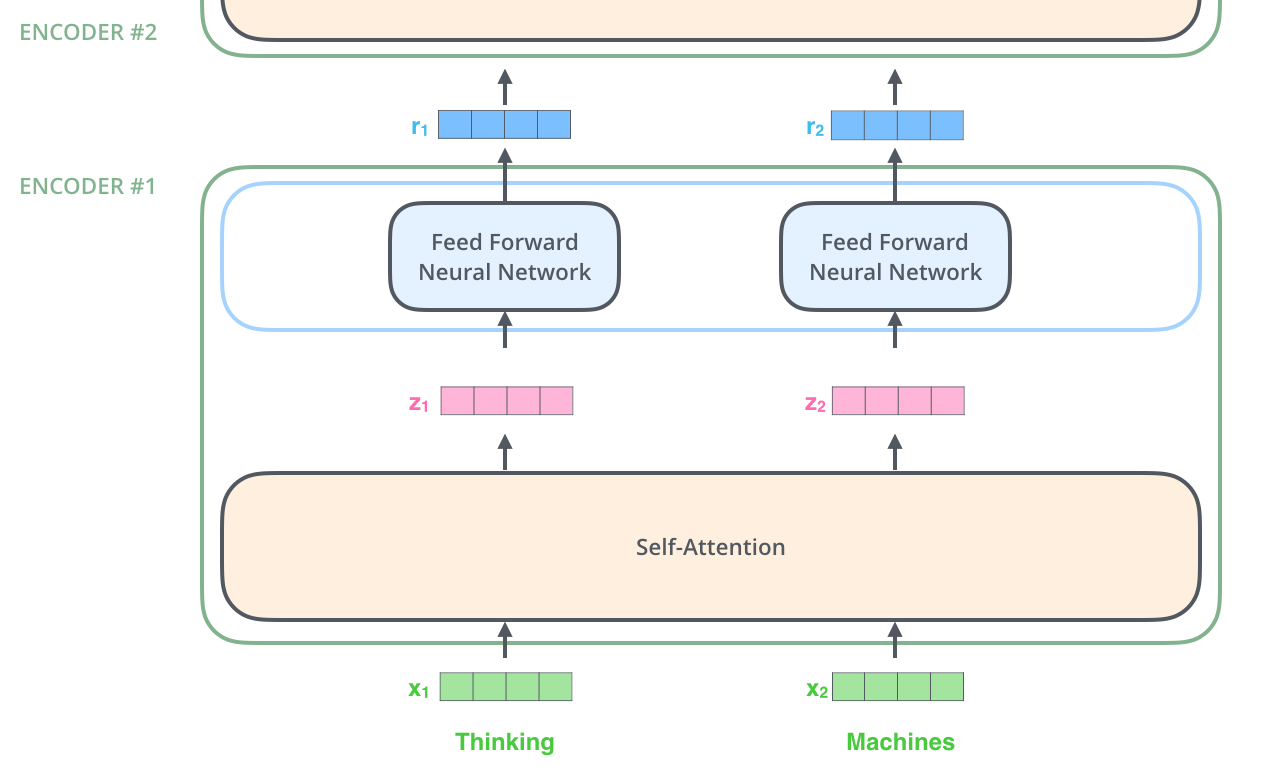

In June 2017, Google's machine translation team published a paper on arXiv entitled "Attention Is All You Need", which quickly attracted attention across the industry. The paper itself is very clearly written, but the conventional wisdom has been that it is quite difficult to implement correctly. An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key.
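To make the query/key/value description concrete, here is a minimal sketch in plain Python. It assumes the scaled dot-product compatibility function used in the paper (the dot product of query and key divided by the square root of the key dimension, followed by a softmax). It handles a single query at a time using lists instead of matrices, and the helper names `dot`, `softmax`, and `attention` are hypothetical, chosen only for illustration.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max score before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Map one query and a set of key-value pairs to an output vector.

    Assumption: compatibility is the scaled dot product dot(q, k) / sqrt(d_k);
    the output is the softmax-weighted sum of the value vectors.
    """
    d_k = len(query)
    # One compatibility score per key.
    scores = [dot(query, k) / math.sqrt(d_k) for k in keys]
    # Normalise the scores into weights that sum to 1.
    weights = softmax(scores)
    # Weighted sum of the values, computed component by component.
    d_v = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(d_v)]


if __name__ == "__main__":
    q = [1.0, 0.0]
    ks = [[1.0, 0.0], [0.0, 1.0]]
    vs = [[10.0, 0.0], [0.0, 10.0]]
    print(attention(q, ks, vs))
```

Because the query lines up with the first key, the first value receives the larger weight, so the output leans toward `[10.0, 0.0]` while still blending in some of the second value. The paper batches this into matrix form so that many queries are processed at once; the loop version above trades speed for readability.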

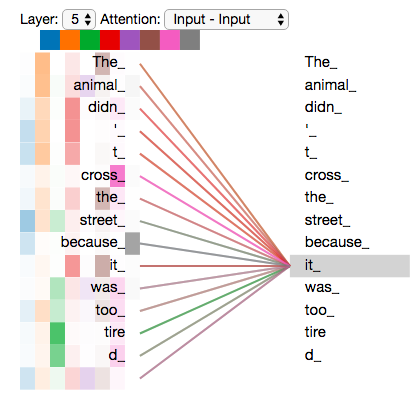

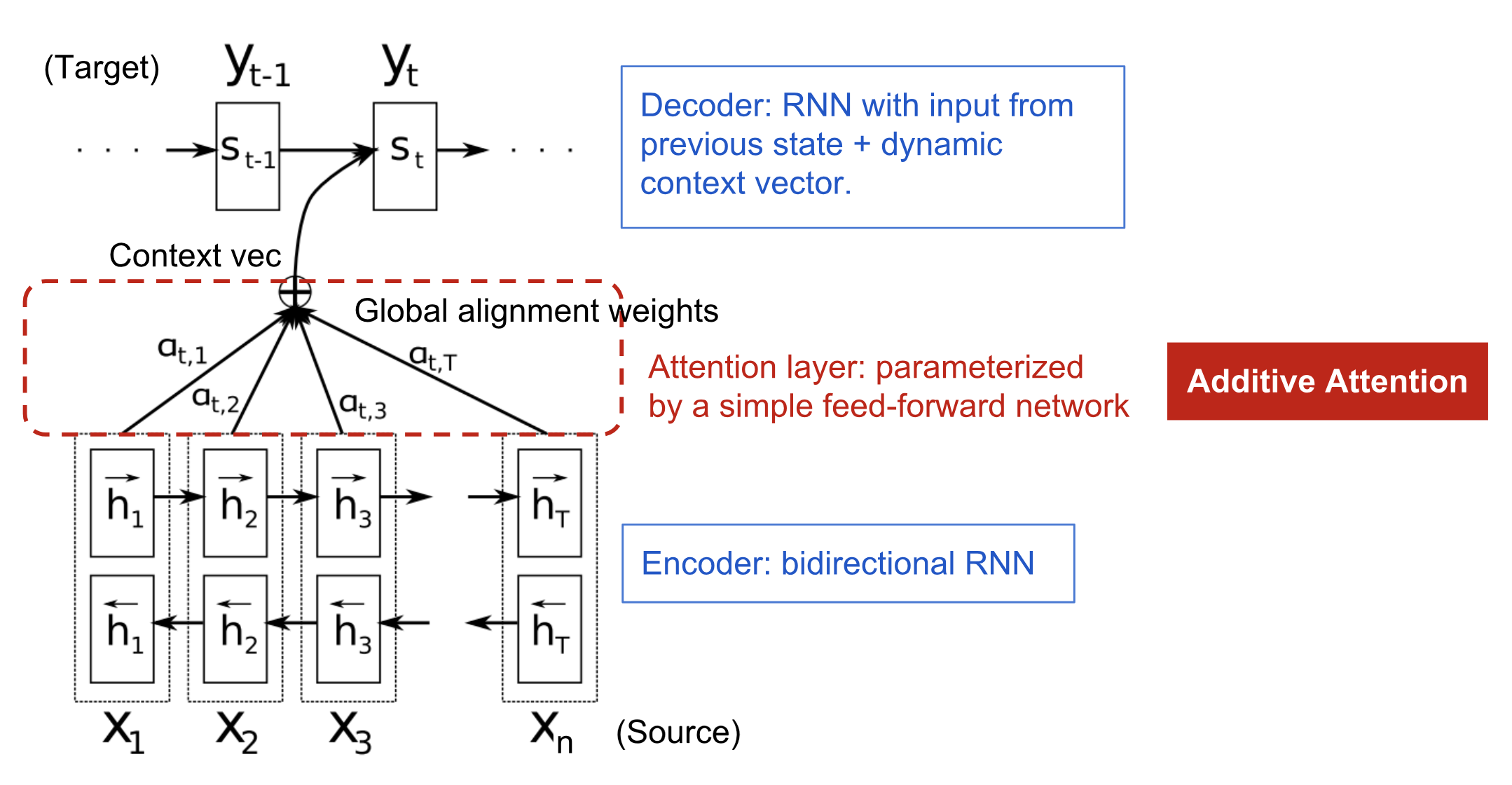

Besides producing major improvements in translation quality, the paper introduces a new architecture, the Transformer, that can be applied to many other NLP tasks. As the paper observes, the dominant sequence transduction models were based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. Self-attention mechanisms became a …

"Attention Is All You Need" by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin is an influential paper with a catchy title that fundamentally changed the field of machine translation.